Description

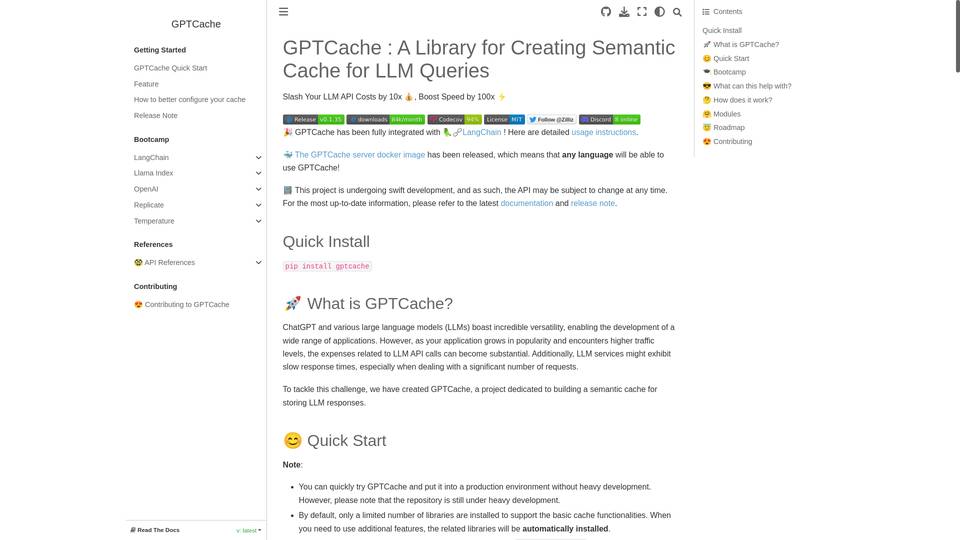

GPTCache is an AI tool that lets developers build a semantic cache for storing responses from large language models (LLMs) such as ChatGPT. It is designed to reduce costs and improve performance when using LLM APIs. Because query results are cached, fewer requests and tokens are sent to the LLM service, which lowers costs. Response times also improve: when a new query is similar to one that has already been answered, GPTCache returns the stored response from the cache instead of calling the LLM service.

GPTCache also provides an easy-to-use development and testing environment, so developers can mock LLM data and test their applications without connecting to the LLM service. It improves scalability and availability as well, since queries served from the cache let an application handle a higher volume of requests and help it stay within the rate limits imposed by LLM services. Overall, GPTCache is a useful tool for optimizing the usage of LLM APIs and improving the efficiency of LLM-based applications.
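To make the caching idea concrete, here is a minimal, self-contained sketch of how a semantic cache works. It is not GPTCache's API: `SemanticCache`, `embed`, `cosine`, and the `threshold` parameter are hypothetical names, and the word-count "embedding" is a crude stand-in for a real embedding model. The `fake_llm` function plays the role of mocked LLM data, so the example runs without any LLM service.

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical minimal semantic cache; illustrative only, not GPTCache's API."""

    def __init__(self, llm: Callable[[str], str], threshold: float = 0.8):
        self.llm = llm                # function that would call the LLM service
        self.threshold = threshold    # minimum similarity that counts as a cache hit
        self.entries: list[tuple[Counter, str]] = []  # (query vector, stored response)
        self.llm_calls = 0            # how many requests actually reached the LLM

    def lookup(self, query: str) -> Optional[str]:
        """Return the stored response of the most similar past query, if similar enough."""
        q = embed(query)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def ask(self, query: str) -> str:
        cached = self.lookup(query)
        if cached is not None:
            return cached             # hit: no request and no tokens sent to the LLM
        self.llm_calls += 1           # miss: forward the query to the LLM
        answer = self.llm(query)
        self.entries.append((embed(query), answer))
        return answer


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        # Mocked LLM: returns canned text instead of calling a real service.
        return f"(LLM answer to: {prompt})"

    cache = SemanticCache(fake_llm, threshold=0.8)
    print(cache.ask("What is the capital of France?"))  # miss: calls fake_llm
    print(cache.ask("what's the capital of France"))    # hit: similarity 5/6, about 0.83
    print("LLM calls:", cache.llm_calls)                # prints: LLM calls: 1
```

The similarity measure here is deliberately simple, and it shows why the choice matters: at this threshold, "What is the capital of Spain?" would also score 5/6 against the France question and wrongly hit the cache. A practical semantic cache relies on a proper embedding model and a tuned similarity threshold to keep such false hits rare.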